This blog post is a summary of an excellent talk titled “Safety-First: How To Develop C++ Safety-Critical Software”. The talk was given by Andreas Weis at CppNow 2023.

Andreas Weis works for Woven by Toyota, where he builds modern software for use in safety-critical systems in the automotive space. He is also one of the co-organizers of the Munich C++ User Group, which allows him to share his passion for C++ with others on a regular basis.

The 3Laws team was so impressed with Andreas’s talk – and how it elucidates the complex standard that is ISO 26262 – that we are reproducing it here (including a few visuals from the talk) so that more people can enjoy its contents.

In his talk, Andreas cites two books as inspiration: The Pragmatic Programmer and The Software Craftsman. Here, we break down his talk into four sections, followed by a link to the full talk:

- What is (functional) safety?

- What are the regulations around functional safety?

- A tour of the ISO safety standard for software

- Using C++ in a safety-critical environment

- Watch the full talk!

From here onwards, to do justice to his compelling talk, we present this blog in the style of Andreas’s talk.

What is (functional) safety?

In each domain, safety has its own unique definition. The computer science definition is that “something bad does not happen.”

Functional safety is a specific type of safety. In the automotive space, functional safety is described in ISO 26262. To understand functional safety, you need a few definitions from this standard:

- Harm is defined as physical injury or damage to the health of humans.

- Risk is the combination of the probability of occurrence of harm and the severity of that harm.

- Unreasonable risk is risk that is unacceptable according to societal moral concepts.

With this, functional safety is described as the absence of unreasonable risk.

Electronic systems can fail for various reasons, due to systematic faults or even random hardware failures. The goal of functional safety is to reduce the risk resulting from such faults. Preventing the fault is just one way to achieve this.

What are the regulations around functional safety?

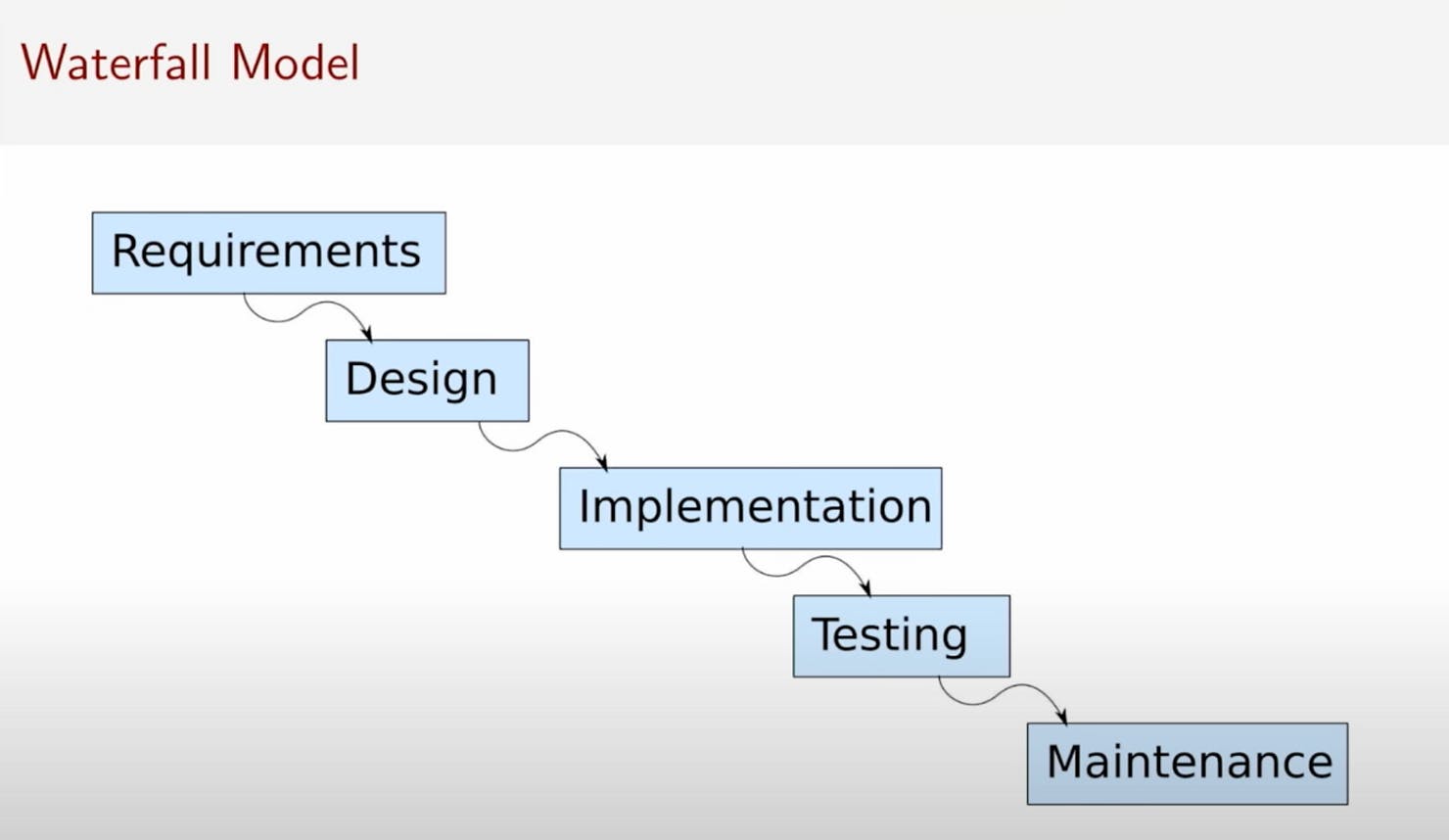

The waterfall model is an old methodology for software development, going from requirements to design to implementation to testing to maintenance. This is no longer considered very practical, as it demands a lot of work up front and the result is hard to maintain. Moreover, because testing comes so late, it is the first thing to be cut if you are running short on time and need to deliver.

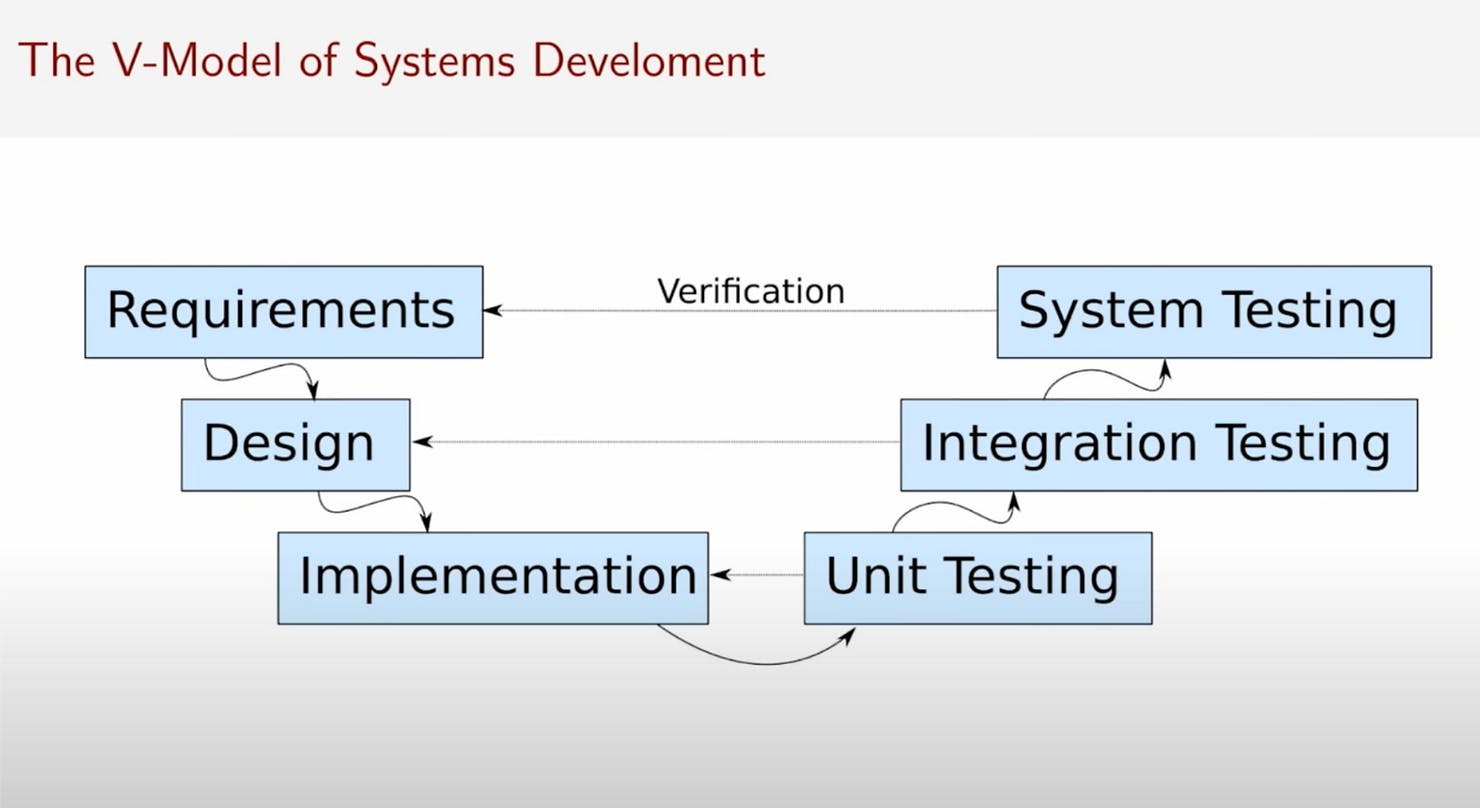

Functional safety standards want you to follow the V-model of systems development, shown above, which was developed in the 1980s. In this model, each step from the waterfall method is paired with a corresponding testing and verification step.

In modern development, the phases are not as strict, and there is a lot more overlap between them, but the V-model is still quite different from agile methods. While it can be slower than agile for development, the rigor is useful for regulatory and safety aspects.

A tour of the ISO safety standard for software

The development life cycle for ISO 26262 looks like this:

- Hazard analysis to identify safety goals (HARA).

- Develop functional safety concept for the system

- Develop technical safety concept as input to hardware and software development phases

- Give exact definitions of outputs and objectives for each phase

- Require traceability between phases across the entire development

The steps of completing the HARA include:

- Identify hazardous events on the level of vehicle functions

- Each hazard will have an associated severity and likelihood.

- Calculate risk from the severity * likelihood

- Calculate the ASIL (Automotive Safety Integrity Level) from the risk * controllability. Controllability in this case refers to the ability to recover from a hazard.

- The outcome of the HARA is a list of safety goals with corresponding ASILs.

The ASIL determines the level of rigor required in addressing the safety goal.
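
To make the HARA steps above more concrete, here is a minimal sketch of an ASIL lookup. It assumes the classification classes from ISO 26262-3 (severity S1 to S3, exposure E1 to E4 standing in for "likelihood", controllability C1 to C3) and uses a commonly cited shorthand for the standard's lookup table: add the three class indices, and a sum of 7, 8, 9, or 10 maps to ASIL A, B, C, or D, while anything lower is QM. The names `determine_asil`, `Severity`, `Exposure`, `Controllability`, and `Asil` are our own illustrations and do not come from the talk; the table in the standard remains the authoritative source.

```cpp
#include <cassert>
#include <cstdint>

// Class names follow ISO 26262-3; "Exposure" plays the role of the
// "likelihood" mentioned in the list above.
enum class Severity : std::uint8_t { S1 = 1, S2 = 2, S3 = 3 };
enum class Exposure : std::uint8_t { E1 = 1, E2 = 2, E3 = 3, E4 = 4 };
enum class Controllability : std::uint8_t { C1 = 1, C2 = 2, C3 = 3 };

// QM ("quality managed") means that no ASIL is assigned.
enum class Asil : std::uint8_t { QM, A, B, C, D };

// Hypothetical helper: applies the "sum of class indices" shorthand.
constexpr Asil determine_asil(Severity s, Exposure e, Controllability c) noexcept {
    const int sum = static_cast<int>(s) + static_cast<int>(e) + static_cast<int>(c);
    assert(sum >= 3 && sum <= 10);  // holds for all valid enumerators
    Asil result = Asil::QM;         // sums of 6 or less stay at QM
    switch (sum) {
        case 7:  result = Asil::A; break;
        case 8:  result = Asil::B; break;
        case 9:  result = Asil::C; break;
        case 10: result = Asil::D; break;
        default: break;
    }
    return result;
}
```

For example, a hazardous event classified as S3, E4, C3 yields `Asil::D` in this sketch, while S1, E4, C1 stays at `Asil::QM`.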

The highest level of design for the functional safety concept includes:

- Deriving from the safety goals a list of functional safety requirements

- Requirements inherit ASIL from the safety goal

- A single requirement may be associated with multiple safety goals

The first strategy for achieving safety goals is to try to avoid the fault. This is always the best option, but not always practical. The next best option is to detect the fault when it happens and control its impact. To do this, you need to design the system to be fault-tolerant, which requires timing analysis, redundancy, and diversity. You can also increase the controllability of a system by giving the operator warnings. Lastly, you can define an emergency fallback state that the system can move to in order to stay safe in the event of faults.
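
As an illustration of "detect the fault and control its impact", the following sketch combines redundancy with a fallback state. It assumes three redundant sensor channels and a simple two-out-of-three agreement check; if no two channels agree within a tolerance, the system moves to a safe state. All names (`VoteResult`, `vote_two_out_of_three`, `select_mode`, `Mode`) are hypothetical, and a real design would derive the tolerance and the fallback behavior from the safety requirements.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>

// Hypothetical system modes for illustration.
enum class Mode { Normal, SafeState };

struct VoteResult {
    bool valid;    // true if at least two channels agreed
    double value;  // mean of the agreeing pair; 0.0 when !valid
};

// Searches for the first pair of redundant readings that agree within
// `tolerance` and reports their mean.
inline VoteResult vote_two_out_of_three(const std::array<double, 3>& readings,
                                        double tolerance) noexcept {
    assert(tolerance >= 0.0);  // precondition on the configured tolerance
    VoteResult result{false, 0.0};
    for (std::size_t i = 0U; (i < readings.size()) && !result.valid; ++i) {
        for (std::size_t j = i + 1U; (j < readings.size()) && !result.valid; ++j) {
            // A NaN reading never satisfies this comparison, so it cannot "agree".
            if (std::fabs(readings[i] - readings[j]) <= tolerance) {
                result.valid = true;
                result.value = (readings[i] + readings[j]) / 2.0;
            }
        }
    }
    return result;
}

// Falls back to the safe state when the channels cannot be reconciled.
inline Mode select_mode(const std::array<double, 3>& readings, double tolerance) noexcept {
    return vote_two_out_of_three(readings, tolerance).valid ? Mode::Normal : Mode::SafeState;
}
```

With readings of 10.0, 10.5, and 50.0 and a tolerance of 1.0, the first two channels outvote the faulty third one; with 10.0, 20.0, and 30.0, no pair agrees and `select_mode` returns `Mode::SafeState`.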

The technical safety concept has its own development phases:

- Define the concrete safety mechanisms that will be responsible for fulfilling the safety requirement. (a) Technical safety requirements take into account concrete details of the implementation. (b) Technical safety requirements need to be testable.

- Describe the functionality of mechanisms including timing and tolerance properties

- Input from the system level into the actual software development process.
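
A safety mechanism with an explicit timing property could look like the sketch below: a deadline monitor that flags a fault when a supervised component has not reported in time. This is our own hypothetical example (`DeadlineMonitor` is not from the talk or the standard). The current time is passed in as a parameter rather than read from a clock inside the class, which keeps the mechanism testable, in line with the requirement above.

```cpp
#include <cassert>
#include <chrono>

// Hypothetical alive supervision: the monitored component must call
// report_alive() at least once per `timeout`, otherwise a fault is flagged.
class DeadlineMonitor {
public:
    using Clock = std::chrono::steady_clock;

    DeadlineMonitor(Clock::time_point start, std::chrono::milliseconds timeout) noexcept
        : last_alive_{start}, timeout_{timeout} {
        assert(timeout_.count() > 0);  // a zero timeout would always report a fault
    }

    // Called by the supervised component on every cycle.
    void report_alive(Clock::time_point now) noexcept { last_alive_ = now; }

    // Called by the supervisor; true means the timing requirement was violated.
    bool deadline_missed(Clock::time_point now) const noexcept {
        return (now - last_alive_) > timeout_;
    }

private:
    Clock::time_point last_alive_;
    std::chrono::milliseconds timeout_;
};
```

A technical safety requirement such as "a missing alive signal is detected within 100 ms" then maps directly onto a test that advances the injected time past the timeout and checks `deadline_missed`.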

Up to this point, the safety of the product has mostly been defined at the hardware and operational level. Now, it is time to look at the software safety requirements. These are derived from the technical safety concept and describe how the software implements the safety-related functionalities. The requirements get reviewed extensively in the process of developing and subsequently certifying a software system.

At the software design phase, the verifiability of the resulting system is extremely important. Not only must it fulfill the system requirements, but you have to be able to prove that it does. The software architecture breaks the system into smaller components that can be developed. Here, the architects play a vital role in supporting the verification and implementation efforts.

The properties of a good architecture, as described by ISO 26262, include:

- Avoidance of systematic faults

- Comprehensible, simple, and verifiable

- Broken down into small, self-contained pieces

- Clearly defined responsibilities

- Encapsulation of critical data

- Maintainability

To achieve this architecture, it is critical to write documentation and extensive unit tests. There needs to be an analysis of dependent failures, and you need to generate evidence that the design is suitable for addressing the safety requirements, as well as resource usage and timing constraints.

Now, it’s time to actually “write” software. However, a lot of safety-critical code is auto-generated from an architectural design model, like MATLAB’s Simulink. This is becoming less popular as systems and state machines grow more complicated. The code relies heavily on the specification formed in earlier phases. The main goal of the code is to be consistent with these specifications, and it should be simple, readable, comprehensible, robust to errors, suitable for modification, and verifiable.

Design standards from ISO 26262

The design principles given by ISO 26262 are quite vague and very difficult to follow. They include:

- Single-entry, single-exit

- No dynamic objects

- No uninitialized variables

- No global variables

- No variables of the same name

- No pointers

- No hidden data flow or control flow

- No recursion

These are often misunderstood to be hard and fast rules, but this is not the case. Taken as such, they would overly restrict your ability to create quality software. The important thing is to recognize the potential dangers of your software environment, and to use relevant coding guidelines that give more specific instructions on software development.
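
To show what some of these principles can look like in C++ without treating them as absolute rules, here is a small sketch of a fixed-capacity buffer. It is a hypothetical example of ours (`BoundedBuffer` is not from the talk): storage lives inside the object so nothing is allocated dynamically, every variable is initialized, each function has a single exit, and the sum is computed iteratively instead of recursively.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical fixed-capacity buffer: its memory footprint is known at
// compile time, so no heap allocation happens at runtime.
template <std::size_t Capacity>
class BoundedBuffer {
public:
    // Reports failure instead of growing when the buffer is full.
    bool push(std::int32_t value) noexcept {
        bool stored = false;  // always initialized
        if (size_ < Capacity) {
            data_[size_] = value;
            ++size_;
            stored = true;
        }
        return stored;  // single exit point
    }

    std::size_t size() const noexcept { return size_; }

    std::int32_t at(std::size_t index) const noexcept {
        assert(index < size_);  // precondition: index refers to a stored element
        return data_[index];
    }

    // Iterative sum: stack usage stays constant, unlike a recursive version.
    std::int64_t sum() const noexcept {
        std::int64_t total = 0;
        for (std::size_t i = 0U; i < size_; ++i) {
            total += data_[i];
        }
        return total;
    }

private:
    std::array<std::int32_t, Capacity> data_{};  // value-initialized to zeros
    std::size_t size_{0U};
};
```

A `BoundedBuffer<2>` accepts two calls to `push` and rejects the third by returning `false`, which makes the out-of-capacity case an explicit, testable behavior rather than a hidden allocation.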

Using C++ in a safety-critical environment

ISO 26262 also gives multiple requirements for programming languages, but again, most languages (including C++) do not meet these requirements in general. Instead, criteria not addressed by the language itself should be covered by the coding guidelines.

The coding guidelines subset the language to exclude its dangerous features. They try to enforce low complexity, as well as strong typing. Concurrency is also emphasized. This is not well addressed by current standards, but is addressed well by MISRA C++. MISRA is the most established set of coding guidelines. It discourages the use of dangerous language features, promotes best practices, and most importantly, avoids undefined behavior.
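
As one way to picture the "strong typing" goal, the sketch below wraps plain `double` values in distinct types so that arguments cannot be swapped or passed as bare numbers by accident. This is a generic C++ idiom that we are using for illustration, not a rule quoted from MISRA; `Quantity`, `Meters`, `Seconds`, `MetersPerSecond`, and `average_speed` are hypothetical names.

```cpp
#include <cassert>

// Hypothetical strong-type wrapper: the Tag parameter makes each unit a
// distinct type, and the explicit constructor blocks implicit conversions.
template <typename Tag>
class Quantity {
public:
    constexpr explicit Quantity(double value) noexcept : value_{value} {}
    constexpr double value() const noexcept { return value_; }

private:
    double value_;
};

struct MetersTag {};
struct SecondsTag {};
struct MetersPerSecondTag {};

using Meters = Quantity<MetersTag>;
using Seconds = Quantity<SecondsTag>;
using MetersPerSecond = Quantity<MetersPerSecondTag>;

inline MetersPerSecond average_speed(Meters distance, Seconds duration) noexcept {
    assert(duration.value() > 0.0);  // precondition: callers rule out zero durations
    return MetersPerSecond{distance.value() / duration.value()};
}

// average_speed(Seconds{2.0}, Meters{10.0});  // does not compile: arguments swapped
// average_speed(10.0, 2.0);                   // does not compile: no implicit conversion
```

The two commented-out calls show the mistakes that the compiler now rejects, which moves a whole class of errors from testing time to build time.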

Testing

While the coding guidelines are great, they do not ensure bug-free code. Testing is the most important part of software safety. ISO 26262 calls for many kinds of testing and verification, including static and dynamic code analysis, formal verification, requirements-based testing, fault injection testing, resource monitoring, and more.

The testing methodologies include analyses of boundary conditions, equivalence classes, functional dependencies, and coverage metrics. Testing should be done on target hardware, and it should be continuously monitored, summarized, and verified in reports.
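
Boundary conditions and equivalence classes can be illustrated with a small, hypothetical unit under test: a function that limits a request to a fixed range. The input space splits into three equivalence classes (below, inside, and above the range), and the test table picks a representative of each class plus the values on and next to each boundary. The names `saturate`, `kMin`, `kMax`, and `run_saturate_tests` are our own, and the range of -100 to 100 is an arbitrary assumption.

```cpp
#include <cassert>
#include <cstdint>
#include <limits>

// Hypothetical limits of the unit under test.
constexpr std::int32_t kMin = -100;
constexpr std::int32_t kMax = 100;

// Unit under test: limits a request to the range [kMin, kMax].
constexpr std::int32_t saturate(std::int32_t request) noexcept {
    return (request < kMin) ? kMin : ((request > kMax) ? kMax : request);
}

struct TestCase {
    std::int32_t input;
    std::int32_t expected;
};

// Checks one table of inputs covering every class and both boundaries.
inline void run_saturate_tests() {
    const TestCase cases[] = {
        {std::numeric_limits<std::int32_t>::min(), kMin},  // class: below range (extreme)
        {kMin - 1, kMin},                                  // just below lower boundary
        {kMin, kMin},                                      // on lower boundary
        {kMin + 1, kMin + 1},                              // just above lower boundary
        {0, 0},                                            // class: inside range
        {kMax - 1, kMax - 1},                              // just below upper boundary
        {kMax, kMax},                                      // on upper boundary
        {kMax + 1, kMax},                                  // just above upper boundary
        {std::numeric_limits<std::int32_t>::max(), kMax},  // class: above range (extreme)
    };
    for (const TestCase& tc : cases) {
        assert(saturate(tc.input) == tc.expected);
    }
}
```

In a real project these cases would live in the team's unit test framework and run on the target hardware, with the results feeding into the coverage metrics and reports mentioned above.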

Lastly, one must remember that creating an environment that ensures these practices are actually followed is critical. Even if it means slowing down product delivery, the proper safety practices must be followed.

Watch the full talk!

Andreas ends his compelling talk by explaining that we do all of this because it has been shown to work over the years in the automotive industry.

The processes he describes are very effective at detecting issues and delivering safe products. One can argue that the methods are not efficient, but they are highly effective at ensuring safety.

To watch the full talk from Andreas himself, head here.

Otherwise, we wish you the best in operating in line with ISO 26262.